New Delhi: New research underscores what other experts have reported, or at least suspected, before: facial recognition technology is subject to biases and shows inaccuracies in gender identification based on the data sets provided and the conditions in which the algorithms are created.

According to the results, gender was misidentified for less than 1% of lighter-skinned males, compared with nearly 35% of darker-skinned females.

Facial recognition has the potential to revolutionise the security of our devices, and it's already available on some very popular gadgets. But what is it, how does it work, and is it racist? Here is what you need to know:

What is facial recognition?

Once the preserve of science-fiction movies, facial recognition is now a mainstream technology.

Basically, it's a way of identifying or verifying who a person is by scanning their face with a computer.

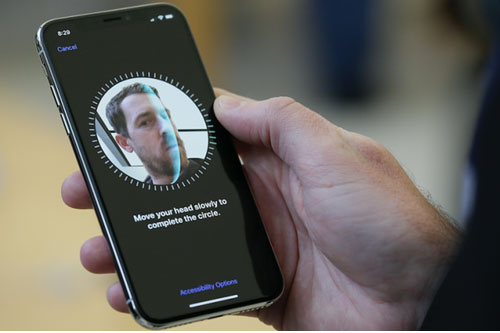

Its main use is to make sure a person is who they say they are – like Apple's Face ID, which uses facial recognition to unlock the new iPhone X.

But it can also be used to identify people in crowds. Chinese police are using smart glasses linked up to a database of faces to spot criminals at railway stations, for instance.

How does it work?

There are lots of different ways facial recognition can work. For example, some Samsung phones use iris scanners to verify your identity using your eyes.

But the most common method of facial recognition will identify features on your entire face.

Apple's Face ID for the iPhone X is probably the best-known facial recognition system, and it's not massively complicated.

Here are the steps your phone takes:

- The phone will use various sensors to work out how much light it needs to illuminate your face

- It then floods your face with infrared light, which is outside the visible spectrum of light

- A dot projector will produce more than 30,000 dots of this invisible light, creating a 3D map of your face

- An infrared camera will then capture images of this dot pattern

Once your phone has all that info, it can use your face's defining features – like your cheekbone shape, or the distance between your eyes – to verify your identity.

It computes a score between 0 and 1, and the closer it is to 1, the more likely it is that your face is the same as the one stored on your phone.
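As a rough illustration of what such a score could look like, the sketch below compares two lists of face measurements using cosine similarity, rescaled so the result falls between 0 and 1. This is not Apple's actual method, which the steps above do not spell out; the feature values, the function names and the 0.9 cut-off are all assumptions made for the example.

```python
import math


def match_score(stored, scanned):
    """Return a 0-to-1 score; closer to 1 means the two faces look more alike.

    Uses cosine similarity (range -1 to 1) rescaled to the range 0 to 1.
    """
    dot = sum(x * y for x, y in zip(stored, scanned))
    norm_stored = math.sqrt(sum(x * x for x in stored))
    norm_scanned = math.sqrt(sum(y * y for y in scanned))
    if norm_stored == 0 or norm_scanned == 0:
        raise ValueError("feature vectors must be non-zero")
    cosine = dot / (norm_stored * norm_scanned)
    return (cosine + 1) / 2


def is_same_face(stored, scanned, threshold=0.9):
    """Accept the scan only if the score reaches the (assumed) threshold."""
    return match_score(stored, scanned) >= threshold


# Hypothetical measurements, e.g. cheekbone shape or distance between the eyes.
stored_face = [0.42, 0.31, 0.77]
scanned_face = [0.40, 0.33, 0.75]
print(is_same_face(stored_face, scanned_face))
```

Where the cut-off is set matters: a lower threshold lets more people in by mistake, while a higher one locks the real owner out more often.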

But as facial recognition systems become more popular, criticisms of the tech are growing.

One of the biggest concerns is that facial recognition is "racist", potentially because many of the people creating these systems are white males.

Back in December, it emerged that a mum and her son – both Chinese – were able to access the same iPhone X using Face ID.

Google was also implicated in a facial recognition fail, after the company's Photos app algorithm automatically tagged two black people as "gorillas".

To fix this, Google ditched tagging for photos of gorillas and chimpanzees, in a bid to make sure it never happened again.

More recently, the MIT Media Lab found that facial recognition was biased towards white males. The research was conducted using facial recognition systems from Microsoft, IBM, and China's Megvii.

Facial recognition technology is improving by leaps and bounds, and some commercial software can now tell the gender of a person in a photograph.

According to a study that tested three different facial recognition systems (including one from Microsoft), the gender of white men was accurately identified 99% of the time.

For white women, this fell to 93%; and for darker-skinned men, the figure was 92%.

But the darker the skin, the more errors arise, up to nearly 35 percent for images of darker-skinned women, according to the study, which breaks fresh ground by measuring how the technology works on people of different races and genders.

It's unlikely that these examples are due to racist developers creating malicious algorithms. These disparate results, calculated by Joy Buolamwini, a researcher at the MIT Media Lab, show how some of the biases in the real world can seep into artificial intelligence, the computer systems that inform facial recognition.
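To show how a comparison like this can be worked out in principle, the sketch below counts how often a system gets gender wrong for each group in a labelled set of photos. It is not the study's own code, and the records are made-up placeholders rather than data from the research; the record layout and the function name are assumptions.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the share of wrong gender predictions for each group.

    Each record is a tuple: (group, true_gender, predicted_gender).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if predicted != truth:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up records for illustration only; not results from the study.
records = [
    ("lighter-skinned men", "male", "male"),
    ("lighter-skinned men", "male", "male"),
    ("darker-skinned women", "female", "male"),
    ("darker-skinned women", "female", "female"),
]
for group, rate in error_rate_by_group(records).items():
    print(f"{group}: {rate:.0%} misidentified")
```

Splitting the results by group is the key step: a single overall accuracy figure can look impressive while hiding a much higher error rate for one group.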

It's also entirely possible that tracking features on darker skin tones is more difficult, and that developers may need to work harder to correct these apparent biases.

In any case, it's never been proven that facial recognition systems are purposefully "racist", so it's worth taking any such claims with a pinch of salt.

References:

https://www.thesun.co.uk

https://www.nytimes.com

https://www.media.mit.edu/